Shihao Wang

I am a second-year Ph.D. student in the Department of Computing at The Hong Kong Polytechnic University, advised by Prof. Lei Zhang. My research spans 3D perception and planning for autonomous vehicles and robotics, multimodal foundation models, streaming video understanding, and test-time adaptation for agentic systems, with publications at top conferences including CVPR, ICCV, and AAAI. I also collaborate closely with NVIDIA Research and have contributed to both foundational multimodal models and autonomous driving systems.

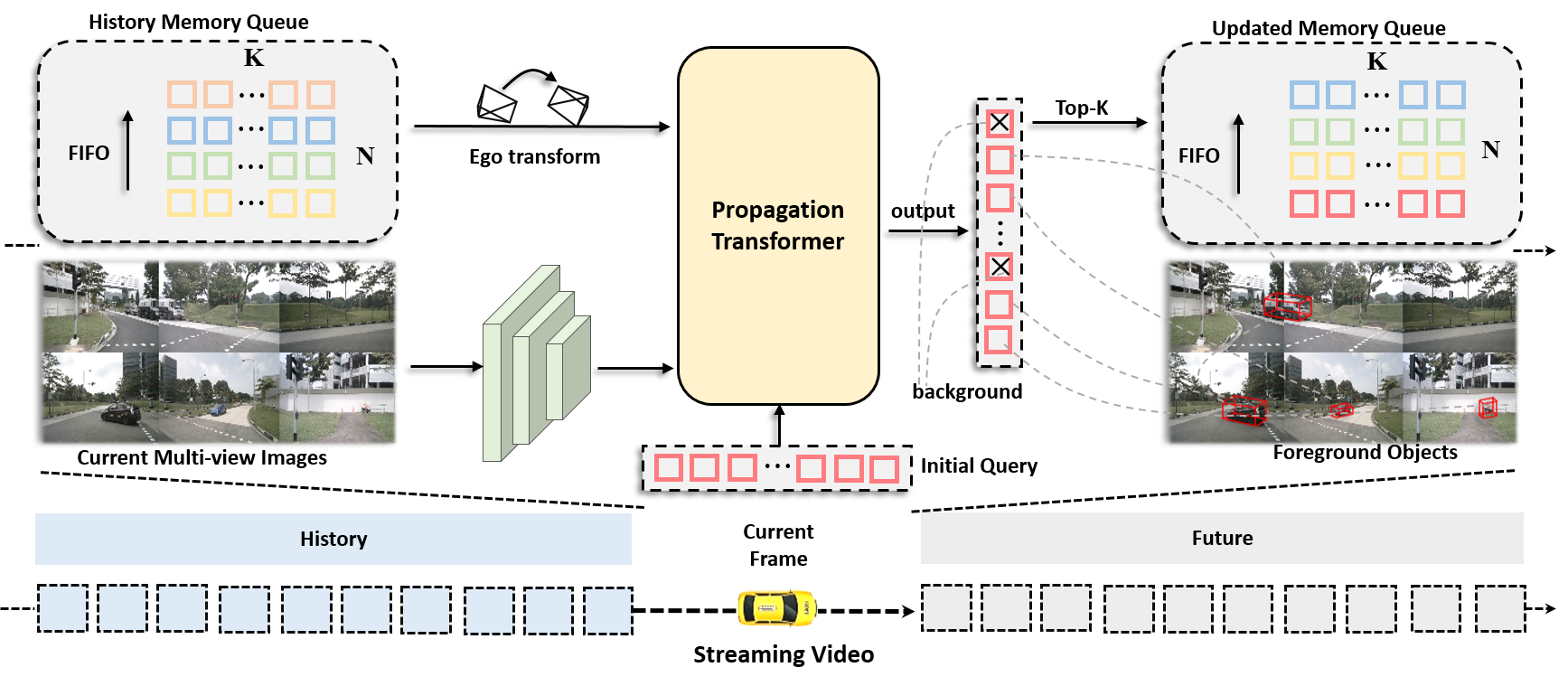

My work includes the Eagle‑VLM series, powering NVIDIA’s commercial multimodal models and the Isaac GR00T humanoid robotics platform, as well as OmniDrive and Hydra‑MDP, which connect 3D perception with multimodal reasoning for end‑to‑end autonomous driving and have earned top awards at the CVPR 2024 Autonomous Driving Grand Challenge. I am also the author of StreamPETR (ICCV’23), a streaming paradigm for camera‑based 3D perception that reached #1 among online methods on nuScenes and has been widely adopted in both academia and industry.

Looking ahead, I am developing memory‑centric, self‑evolving AI agents capable of persistent long‑horizon reasoning across virtual and physical domains, aiming to push beyond task‑specific solutions toward truly reliable general‑purpose autonomy.

🔥 News

- 2025.09: 🎉 Two papers have been accepted to NeurIPS 2025.

- 2025.06: 🎉 Contributed to the development and public release of GR00T N1.5.

- 2025.06: 🎉 Hydra-NeXt accepted to ICCV 2025.

- 2025.02: 🎉 OmniDrive accepted to CVPR 2025.

- 2024.11: 🎉 Eagle accepted to ICLR 2025.

- 2024.06: 🏆 1st Place in End-to-End Driving at Scale, 2nd Place in Driving with Language, CVPR 2024 Autonomous Driving Grand Challenge.

- 2023.11: 🎉 Far3D accepted to AAAI 2024.

- 2023.10: 🎉 Joined NVIDIA AV Applied Research Group as a Research Intern.

- 2023.02: 🎉 StreamPETR accepted to ICCV 2023.

- 2022.10: 🎉 Joined MEGVII Technology Foundation Model Group as a Research Intern.

📝 Publications

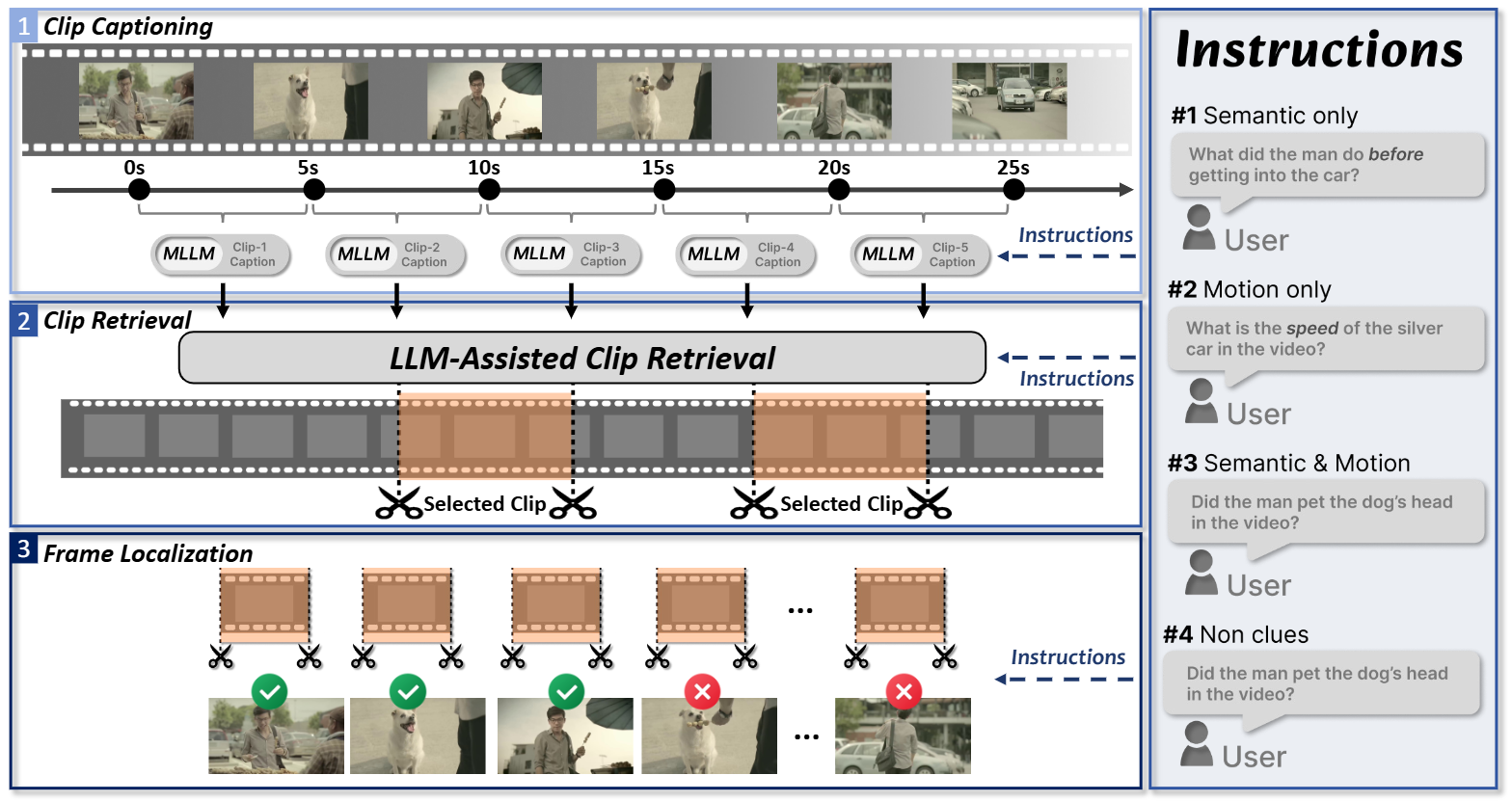

VideoITG: Multimodal Video Understanding with Instructed Temporal Grounding

Shihao Wang, Guo Chen, De-An Huang, Zhiqi Li, Minghan Li, Guilin Liu, Jose M. Alvarez, Lei Zhang and Zhiding Yu.

arXiv 2025

GR00T N1.5: An Improved Open Foundation Model for Generalist Humanoid Robots

Johan Bjorck, Valts Blukis, Fernando Castañeda, Nikita Cherniadev, Xingye Da, Runyu Ding, Linxi “Jim” Fan, Yu Fang, Dieter Fox, Fengyuan Hu, Spencer Huang, Joel Jang, Xiaowei Jiang, Kaushil Kundalia, Jan Kautz, Zhiqi Li, Kevin Lin, Zongyu Lin, Loic Magne, Yunze Man, Ajay Mandlekar, Avnish Narayan, Soroush Nasiriany, Scott Reed, You Liang Tan, Guanzhi Wang, Jing Wang, Qi Wang, Shihao Wang, Jiannan Xiang, Yuqi Xie, Yinzhen Xu, Seonghyeon Ye, Zhiding Yu, Yizhou Zhao, Zhe Zhang, Ruijie Zheng, Yuke Zhu

Blog 2025

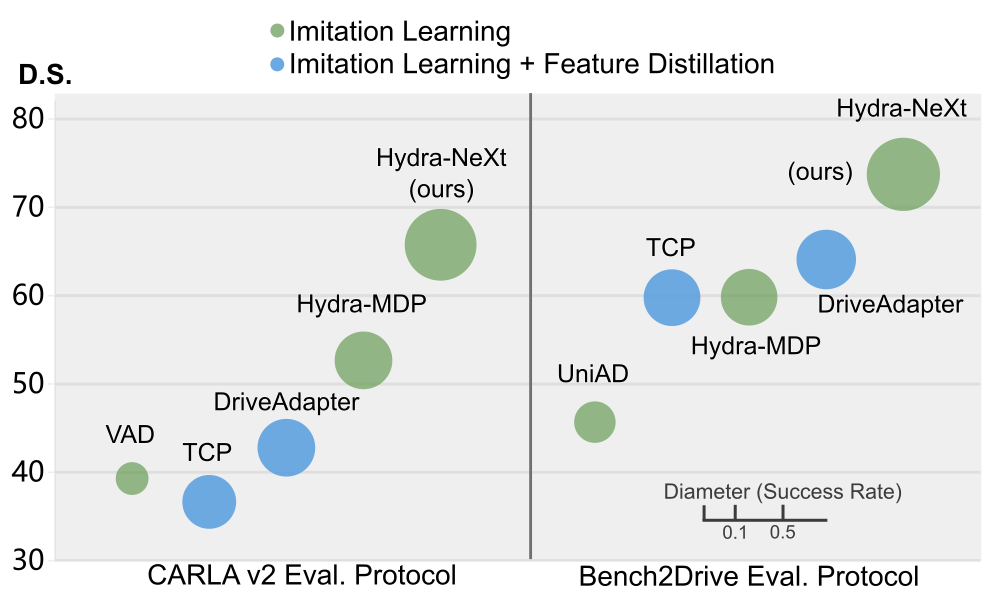

Hydra-NeXt: Robust Closed-Loop Driving with Open-Loop Training

Zhenxin Li, Shihao Wang, Shiyi Lan, Zhiding Yu, Zuxuan Wu and Jose M. Alvarez.

ICCV 2025

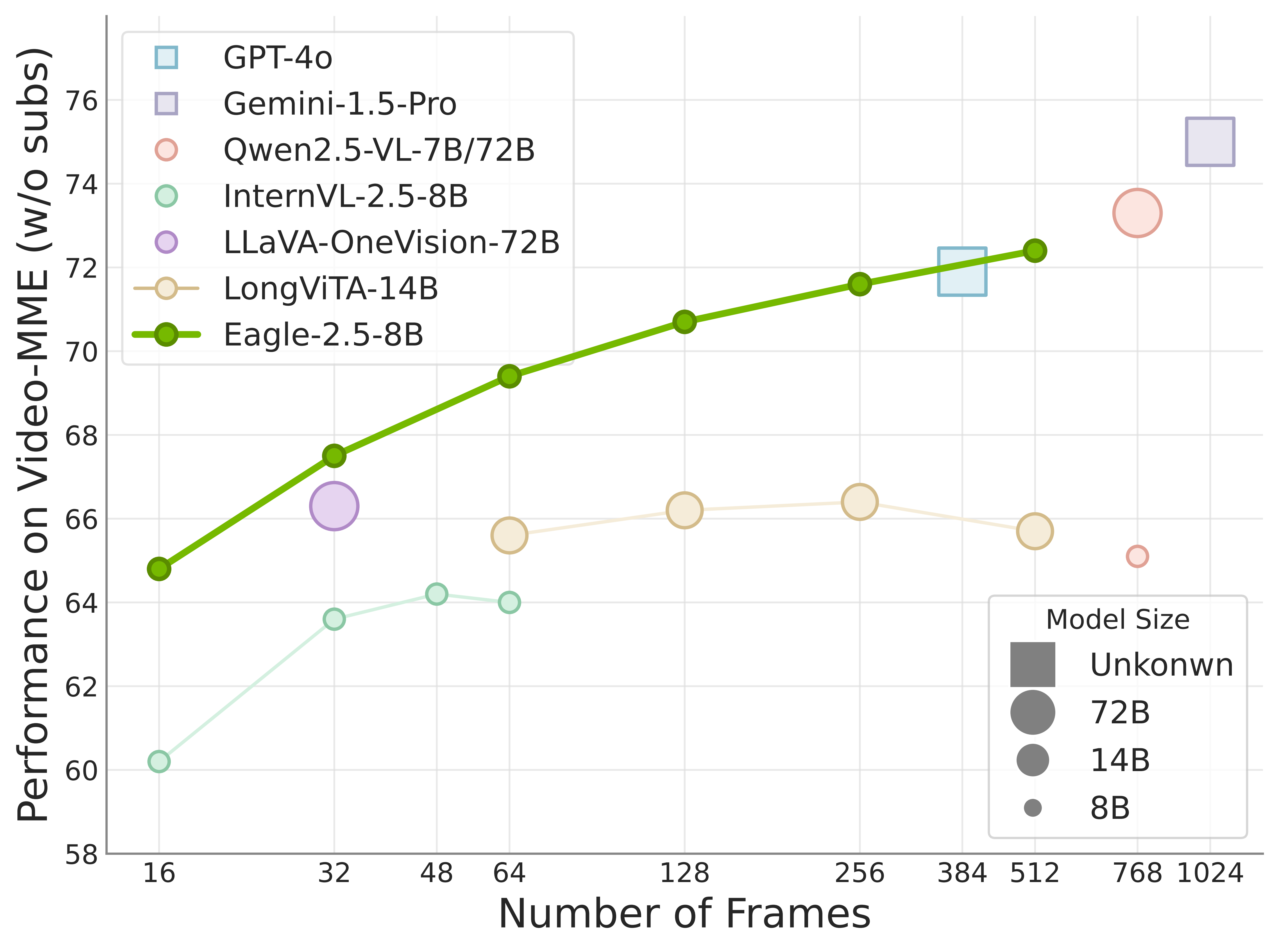

Eagle 2.5: Boosting Long-Context Post-Training for Frontier Vision-Language Models

Guo Chen, Zhiqi Li, Shihao Wang, Jindong Jiang, Yicheng Liu, Lidong Lu, De-An Huang, Wonmin Byeon, Matthieu Le, Tuomas Rintamaki, Tyler Poon, Max Ehrlich, Tong Lu, Limin Wang, Bryan Catanzaro, Jan Kautz, Andrew Tao, Zhiding Yu, Guilin Liu

NeurIPS 2025

Eagle 2: Building Post-Training Data Strategies from Scratch for Frontier Vision-Language Models

Zhiqi Li, Guo Chen, Shilong Liu, Shihao Wang, Vibashan VS, Yishen Ji, Shiyi Lan, Hao Zhang, Yilin Zhao, Subhashree Radhakrishnan, Nadine Chang, Karan Sapra, Amala Sanjay Deshmukh, Tuomas Rintamaki, Matthieu Le, Ilia Karmanov, Lukas Voegtle, Philipp Fischer, De-An Huang, Timo Roman, Tong Lu, Jose M. Alvarez, Bryan Catanzaro, Jan Kautz, Andrew Tao, Guilin Liu and Zhiding Yu.

arXiv 2025

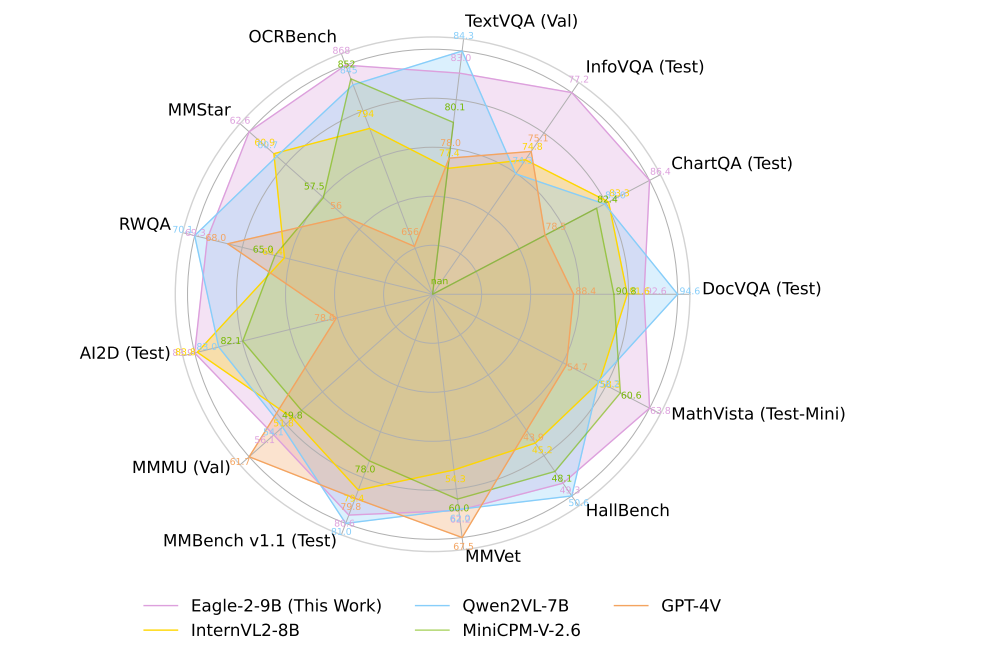

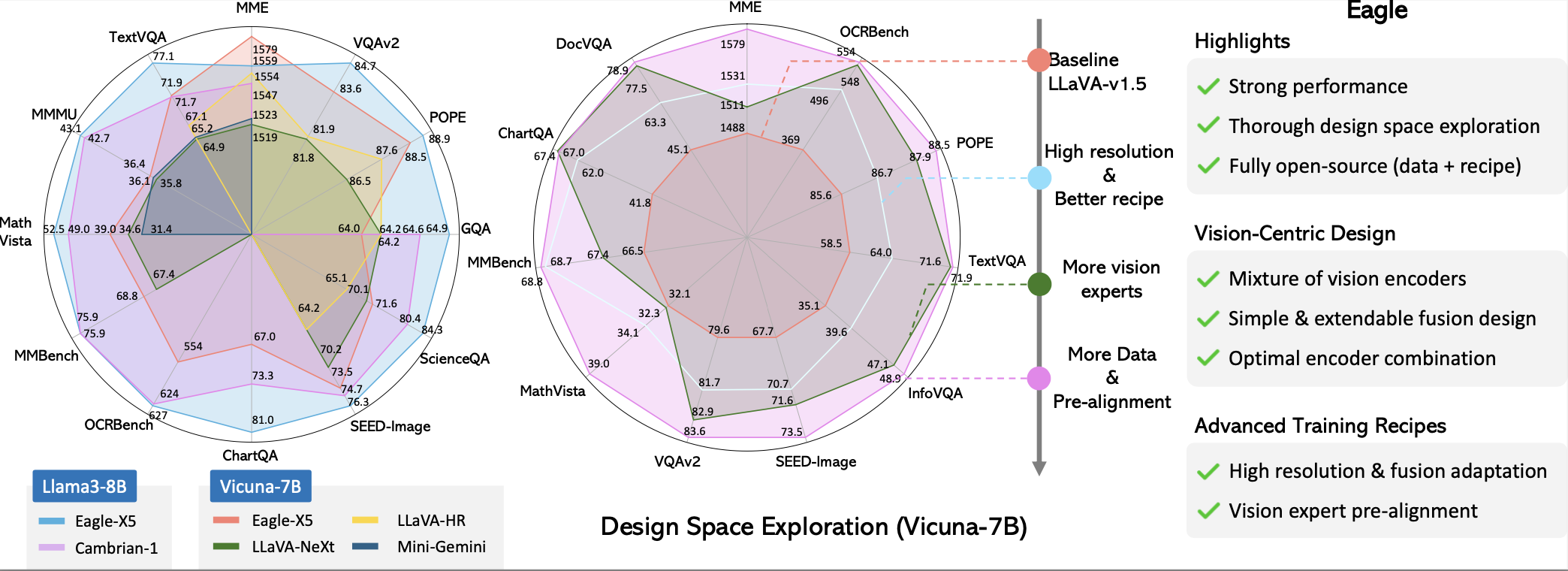

Eagle: Exploring the Design Space for Multimodal LLMs with Mixture of Encoders

Min Shi, Fuxiao Liu, Shihao Wang, Shijia Liao, Subhashree Radhakrishnan, Yilin Zhao, De-An Huang, Hongxu Yin, Karan Sapra, Yaser Yacoob, Humphrey Shi, Bryan Catanzaro, Andrew Tao, Jan Kautz, Zhiding Yu and Guilin Liu.

ICLR 2025

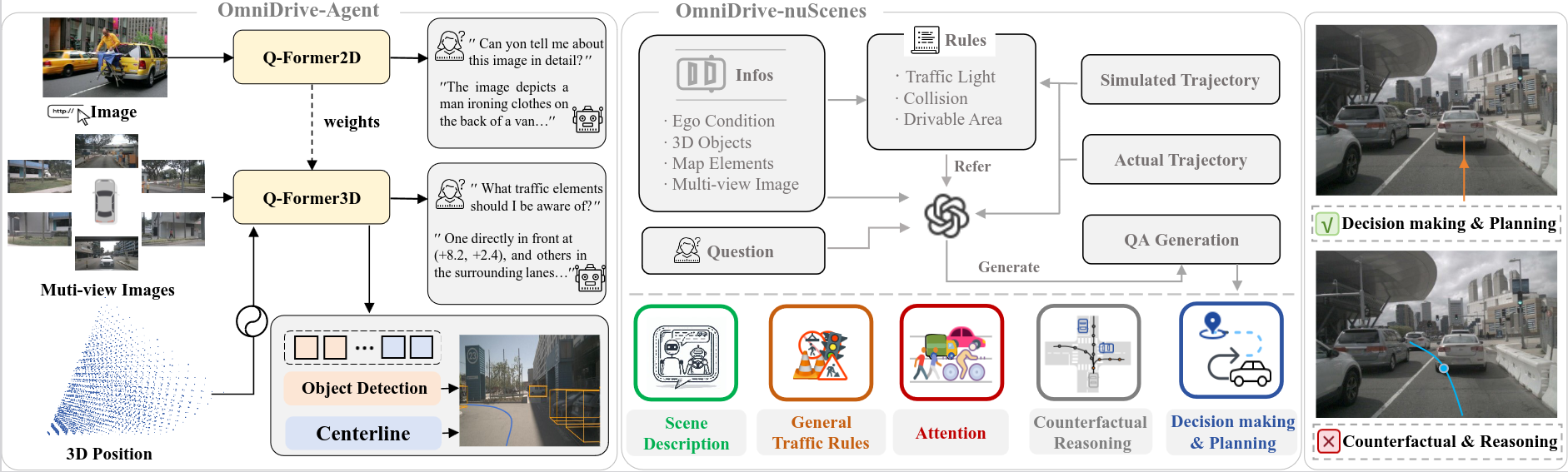

OmniDrive: A Holistic Vision-Language Dataset for Autonomous Driving with Counterfactual Reasoning

Shihao Wang, Zhiding Yu, Xiaohui Jiang, Shiyi Lan, Min Shi, Nadine Chang, Jan Kautz, Ying Li and Jose M. Alvarez.

CVPR 2025

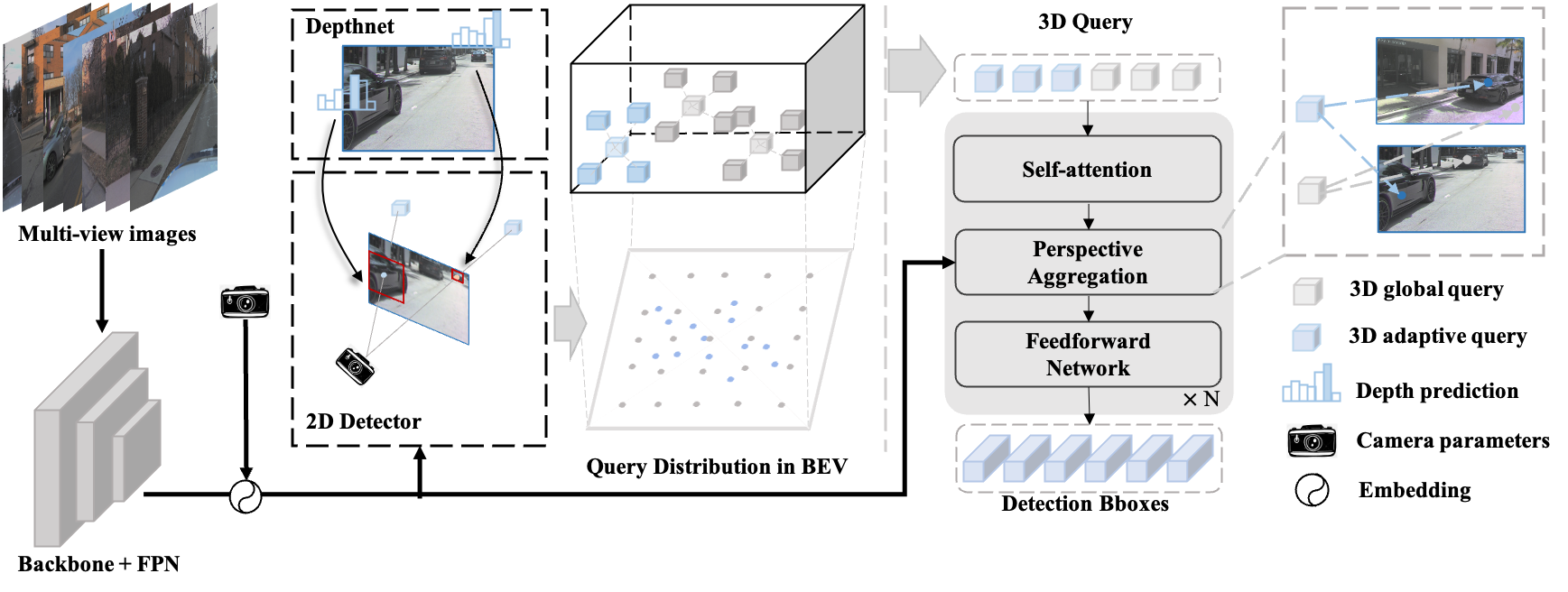

Far3D: Expanding the Horizon for Surround-View 3D Object Detection

Xiaohui Jiang, Shuanglin Li, Yingfei Liu, Shihao Wang, Fan Jia, Tiancai Wang, Lijin Han and Xiangyu Zhang.

AAAI 2024

StreamPETR: End-to-End Multi-View 3D Object Detection with Transformers

Shihao Wang, Yingfei Liu, Tiancai Wang, Ying Li and Xiangyu Zhang.

ICCV 2023

🎖 Honors and Awards

- 2024.06 Winner, CVPR24 Challenge on End-to-End Driving at Scale

- 2024.06 2nd Place, CVPR24 Challenge on Driving with Language

- 2023.11 1st Place, nuScenes leaderboard on camera-only 3D object tracking

📖 Education

- 2024.09 - Present, Ph.D. in Computer Vision, Department of Computing, The Hong Kong Polytechnic University (PolyU), Hong Kong

- 2021.09 - 2024.06, M.Sc. in Vehicle Engineering, Beijing Institute of Technology (BIT), Beijing, GPA: 89.5/100

- 2017.09 - 2021.06, B.Sc. in Vehicle Engineering, Beijing Institute of Technology (BIT), Beijing, GPA: 87.5/100

💬 Invited Talks

- 2024.07, OmniDrive: Advancing autonomous driving 3D perception, reasoning, and planning with large models

- 2023.12, Sparse vectorized representation for long-term temporal modeling

- 2023.08, How can BEV perception be achieved without BEV features?

💻 Internships

- 2023.10 - 2025.01, NVIDIA, Beijing, China.

  - Research Intern, AV Applied Research Group. Contributed to the Eagle VLM family, VideoITG, Hydra-MDP, OmniDrive, and GR00T N1.5.

- 2022.04 - 2023.07, MEGVII Technology, Beijing, China.

  - Research Intern, Foundation Model Group (PI: Xiangyu Zhang). Developed StreamPETR, Far3D, etc.